It's my pleasure to post this article with the sole objective of helping people who want to get a jump start in the BIG DATA world. As this is my first post, I dedicate it to my father, Mr. Pawan Kumar Khandelwal, the inspiration of my life.

This article will help anyone install Hadoop from scratch without any prior big data knowledge. After that, you are all set to explore the BIG DATA world.

Apache Hadoop is an open-source software framework for the storage and large-scale processing of data sets on clusters of commodity hardware. It incorporates features similar to those of the Google File System (GFS) and the MapReduce computing paradigm. Hadoop's HDFS is a highly fault-tolerant distributed file system and, like Hadoop in general, is designed to be deployed on low-cost hardware, which makes it very cost-effective. It provides high-throughput access to application data and is suitable for applications that have large data sets.

Here I am going to explain how to set up a single-node Hadoop cluster with the Hadoop Distributed File System (HDFS) underneath. In my next post I will extend it to a multi-node cluster. So here we go...

The setup below is based on my own machine's configuration.

Requirements

1. Windows 7 OS with 4 GB RAM and 500 GB HDD

2. Oracle VM Virtual Box 4.3.2

3. Hadoop 1.0.3

4. Ubuntu 13.04

5. Daemon Tools Lite 4.48.1.0347

6. Oracle Java 7

7. Internet Connection

Download Links

1. Oracle VM Virtual Box

https://s3-us-west-2.amazonaws.com/blog-singlenodehadoop/ORACLE+VIRTUAL+BOX/VirtualBox-4.3.2-90405-Win.exe

2. Hadoop 1.0.3

https://s3-us-west-2.amazonaws.com/blog-singlenodehadoop/Hadoop/hadoop-1.0.3.tar.gz

3. Ubuntu 13.04

https://s3-us-west-2.amazonaws.com/blog-singlenodehadoop/Ubuntu+13.04/ubuntu-13.04-desktop-amd64.iso

4. Daemon Tools

https://s3-us-west-2.amazonaws.com/blog-singlenodehadoop/Daemon+Tools/DTLite4481-0347%5B2%5D.exe

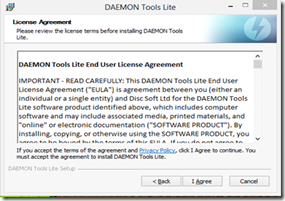

INSTALLING DAEMON TOOLS LITE

1. Download DAEMON TOOLS from the link mentioned above.

2. Run the downloaded setup file.

3. Choose the language as English and click NEXT.

4. You will be prompted to accept the License Agreement. Click "I Agree".

5. Next, it asks you to choose the license type. Choose the Free License and click NEXT.

6. The next window displays the components to be added in the installation. Leave them at their defaults and click NEXT.

7. Next, it prompts you to install Entrusted Search Protect. Select the Express radio button and click NEXT.

8. Then it offers to install TuneUp Utilities for system performance. This is optional: select the checkbox if you want it, otherwise deselect it, and click NEXT.

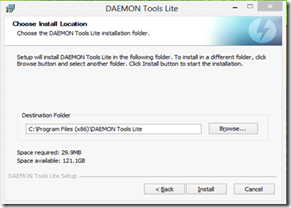

9. Next, select the location in which you want Daemon Tools to be installed. The default is "C:\Program Files (x86)\DAEMON Tools Lite". You can change the location if you want. Click INSTALL.

10. Finally, click CLOSE. Daemon Tools is now successfully installed on your system.

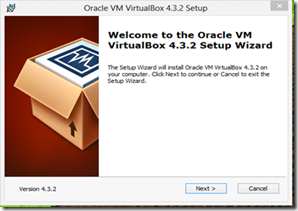

INSTALLING ORACLE VM VIRTUAL BOX

1. Download Oracle VM Virtual Box from the link mentioned above.

2. Run the downloaded setup file.

3. Click NEXT on the setup wizard.

4. Change the installation location if required, or leave it at the default (C:\Program Files\Oracle\VirtualBox\), and click NEXT.

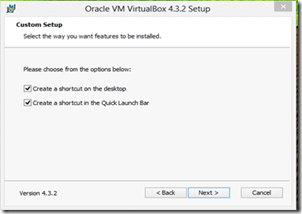

5. Select or deselect the options to create shortcuts on the desktop or launch bar, according to your requirements, and click NEXT.

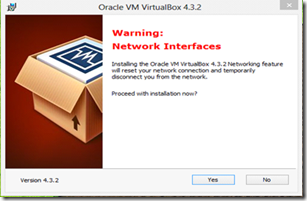

6. The Oracle VM Virtual Box networking feature resets your network connection and temporarily disconnects you from the network. When it asks you to confirm the installation, click "YES".

7. A final review screen appears. Click BACK if any changes are required in the previous steps; otherwise click INSTALL.

8. During installation, it prompts you to install the Oracle Corporation Universal Serial Bus device software. Click INSTALL.

9. Finally, click FINISH. Oracle VM Virtual Box is now successfully installed and ready to use.

CONFIGURING A VM

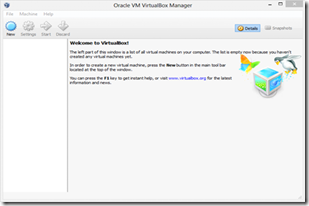

Now we will create and configure a VM using Oracle VM Virtual Box. Our objective for now is a single-node Hadoop cluster, so we will create only one VM with the necessary configuration.

1. Run the Oracle VM Virtual Box from your Desktop.

2. Click on NEW to create a new Virtual Machine.

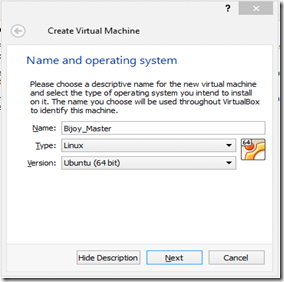

3. Give your VM a name of your choice (I have named it Bijoy_Master).

4. Choose the type of operating system you are going to install in the VM (Linux).

5. Finally, choose the operating system version, Ubuntu (64 bit), and click NEXT.

6. Now select the amount of memory (RAM), in megabytes, to be allocated to the VM. Select 2 GB (2048 MB) for better performance. You can change this according to the RAM available on your machine.

7. In the next window, select the second radio button, "Create a virtual hard drive now", and click NEXT.

8. Select VDI (VirtualBox Disk Image) and click NEXT.

9. Select a fixed-size hard disk for your VM. With dynamic allocation, the disk file grows as data is stored but does not shrink when that data is deleted. Fixed-size allocation also offers better speed.

Click NEXT.

10. Allocate the amount of hard disk space for the VM. Select 30 GB. You can increase or decrease this allocation depending on your system's hard disk capacity.

Click CREATE.

11. It may take a few minutes to create your VM. Once this completes, your VM appears with the specified configuration in the left pane of Oracle VM Virtual Box.

INSTALLING UBUNTU

1. Download Ubuntu 13.04 from the link mentioned above and save it to your disk in any location.

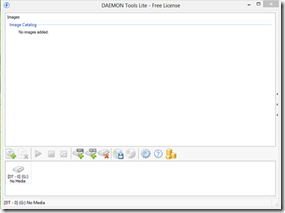

2. Open Daemon Tools and click on the Add Image Tab.

3. Browse to the location of the Ubuntu image, select it, and load it.

4. Click on the added image and click on the Mount button.

5. You will get a notification that a CD drive has been added with the Ubuntu image loaded.

6. Start Oracle VM VirtualBox.

7. Click SETTINGS and select STORAGE from the left pane. In the right pane, click Host Drive under Controller IDE. On the extreme right, click the disc image icon and choose the drive containing the Ubuntu image mounted via Daemon Tools. Click OK. The disk drive is now added, and the Ubuntu image is ready to use inside the VM.

8. Now right-click the configured VM (Bijoy_Master) and click START.

9. When the VM starts, it asks whether you want to TRY UBUNTU or INSTALL UBUNTU. Click INSTALL UBUNTU and select the language of your choice.

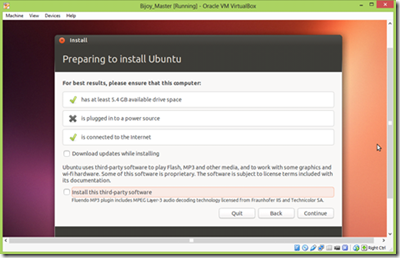

10. Next, it prompts you to ensure that your VM has at least 5.4 GB of available drive space, that the machine is plugged in to a power source, and that the internet is connected. After ensuring everything is set, click CONTINUE.

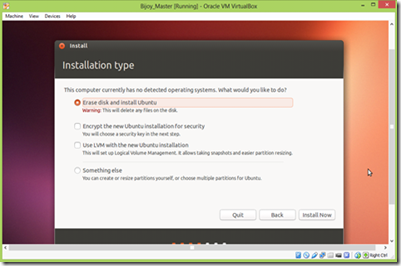

11. In the next window, select ERASE DISK AND INSTALL UBUNTU and click INSTALL NOW.

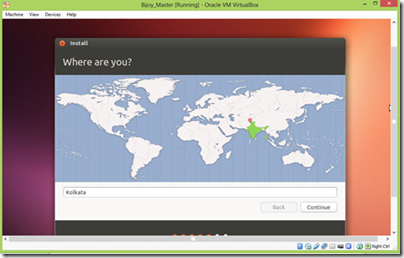

12. In the next window select your city and click on the CONTINUE button.

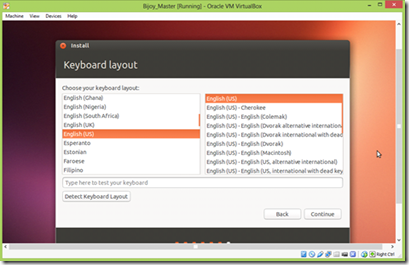

13. Select English (US) as your keyboard layout and click CONTINUE.

14. In the next window fill in your NAME, COMPUTER'S NAME, USERNAME and PASSWORD and click on CONTINUE.

15. The installation starts and will take some time. If you don't require language packs, click SKIP when it starts downloading them.

16. Unmount the Ubuntu image from DAEMON TOOLS.

17. The VM will be stopped. Start Oracle VM Virtual Box again, right-click the VM, and click START.

18. Ubuntu will start. Enter the password you set during installation to log in.

19. Open the Terminal in Ubuntu.

INSTALLATION OF JAVA

1. To install Java 7, run the following commands in the terminal (an internet connection is required).

When prompted press ENTER to continue.

Next, update the apt-get package lists with the following command.

Then run the following command for installation.

It prompts for confirmation. Press Y and hit Enter.

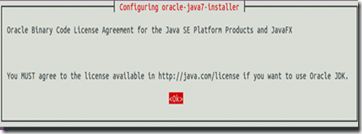

It then displays two windows, one after the other, for accepting the license agreement.

Select OK in the first window and YES in the second window.

If the installation fails with the error "dpkg: error processing oracle-java7-installer (--configure)", try changing the PPA.

Before changing the PPA, use purge to completely remove the unsuccessful Java 7 installation.

Then use the following commands to change the PPA, and install Java 7.

After installation, verify the Java version installed in your VM using the following command.
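As an optional extra check, the following minimal Python sketch (Python 3.7+ assumed) runs `java -version` and extracts the version string. It assumes `java` is on the PATH. It relies on the JVM printing its version banner to standard error, with a first line such as `java version "1.7.0_45"` for Oracle Java 7. The function names are hypothetical helpers and are not part of any Java or Hadoop tooling.

```python
import re
import subprocess
from typing import Optional


def parse_java_version(banner: str) -> Optional[str]:
    """Extract the quoted version from `java -version` output, e.g. 1.7.0_45."""
    match = re.search(r'version "([^"]+)"', banner)
    return match.group(1) if match else None


def installed_java_version() -> Optional[str]:
    """Run `java -version`; return its version string, or None if java is absent."""
    try:
        result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    except FileNotFoundError:
        return None
    # The JVM prints the version banner on stderr rather than stdout
    return parse_java_version(result.stderr or result.stdout)


if __name__ == "__main__":
    version = installed_java_version()
    if version is None:
        print("java was not found on the PATH")
    elif version.startswith("1.7"):
        print("Java 7 detected:", version)
    else:
        print("unexpected Java version:", version)
```

Java 7 reports its version with a `1.7` prefix, which is why the script checks for that prefix. Any other value means the first `java` on the PATH is not the Oracle Java 7 installed above.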

ADDING A DEDICATED HADOOP USER

We will use a dedicated Hadoop user account for running Hadoop. This is not mandatory, but it is recommended because it keeps the Hadoop installation separate from other software and users on the machine.

To create a dedicated Hadoop user, use the following commands.

This creates the hadoop group.

This creates "vijay" as a user in the hadoop group.

Remember the password for future reference.

SSH CONFIGURATION

Hadoop requires SSH access to manage its nodes, that is, the remote machines plus the local machine. For our single-node cluster, we therefore need to configure SSH access to localhost for the user "vijay".

1. First, we will generate an SSH key for the user "vijay". Switch to the hadoop user (vijay).

2. Now generate the SSH key for the hadoop user (vijay) using the following command.

This creates an RSA key pair with an empty passphrase. An empty passphrase is generally not recommended, but here it lets the key be unlocked without your interaction (you don't want to enter the passphrase every time Hadoop interacts with its nodes).

3. Now enable SSH access to your local machine with this newly created key, using the following command.

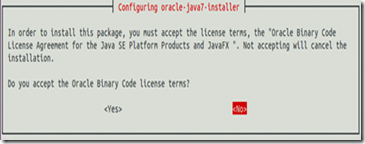

4. The final step is to test the SSH setup by connecting to your local machine as the hadoop user (vijay). This step is also needed to save your local machine's host key fingerprint to the known_hosts file of the hadoop user (vijay).

Use the following command.

5. If you encounter an error like the following:

then first switch to your administrative (sudo) user (here bijoy) using the "su - bijoy" command.

6. Then install the OpenSSH server using the following command.

Once the OpenSSH server is installed, try the "ssh localhost" command again.

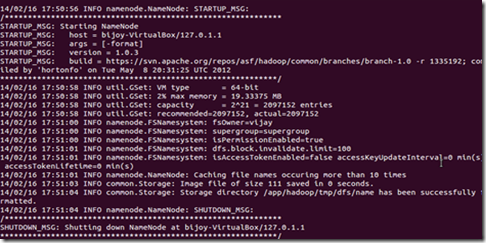

7. It will ask for a new SSH password (provide one) and show a result like:
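Step 3 amounts to appending the hadoop user's public key to their `authorized_keys` file. If you prefer to script this, the following hedged Python sketch shows one way to do it. It assumes OpenSSH's default file names (`~/.ssh/id_rsa.pub` and `~/.ssh/authorized_keys`), and `merge_authorized_key` and `authorize_key` are hypothetical helpers. It skips the append when the key is already present, so re-running it is harmless. It also sets the file mode to 600, because sshd's default `StrictModes` setting refuses an `authorized_keys` file that other users can write to.

```python
import os
from pathlib import Path
from typing import Tuple


def merge_authorized_key(existing: str, key: str) -> Tuple[str, bool]:
    """Return (new authorized_keys text, whether the key was added)."""
    key = key.strip()
    if key in (line.strip() for line in existing.splitlines()):
        return existing, False
    # Start the new key on a fresh line if the file lacks a trailing newline
    if existing and not existing.endswith("\n"):
        existing += "\n"
    return existing + key + "\n", True


def authorize_key(pub_key_path: Path, auth_keys_path: Path) -> bool:
    """Append the key in pub_key_path to auth_keys_path; True if it was added."""
    key = pub_key_path.read_text()
    existing = auth_keys_path.read_text() if auth_keys_path.exists() else ""
    new_text, added = merge_authorized_key(existing, key)
    if added:
        auth_keys_path.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
        auth_keys_path.write_text(new_text)
    # sshd's default StrictModes rejects authorized_keys files others can write
    os.chmod(auth_keys_path, 0o600)
    return added
```

Run it as vijay, for example `authorize_key(Path.home() / ".ssh" / "id_rsa.pub", Path.home() / ".ssh" / "authorized_keys")`, and then test the setup with "ssh localhost" as described above.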

DISABLE IPV6

On Ubuntu, using 0.0.0.0 for the various networking-related Hadoop configuration options will result in Hadoop binding to the IPv6 addresses of the Ubuntu box. There is no practical point in enabling IPv6 on a box that is not connected to any IPv6 network, so it should be disabled.

1. To disable IPv6 on Ubuntu, open /etc/sysctl.conf in an editor using the following command:

2. Add the following lines to the end of the file:

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

Press CTRL+X, save the file, and exit the editor.

3. Restart the VM for the changes to take effect.

4. After restarting, check whether IPv6 has been disabled using the following command:

If it shows the value 1, IPv6 has been successfully disabled.
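The edit and the check can also be scripted. The following minimal Python sketch assumes a Linux kernel that exposes `/proc/sys/net/ipv6/conf/all/disable_ipv6`, which holds the live value of the first setting above. `add_missing_settings` appends only the lines that are not already in the file, treating `key = value` and `key=value` as equal, so it is safe to re-run. The helper names are hypothetical, and writing `/etc/sysctl.conf` itself still requires root privileges.

```python
from pathlib import Path

# The three settings from step 2 above
IPV6_SETTINGS = [
    "net.ipv6.conf.all.disable_ipv6 = 1",
    "net.ipv6.conf.default.disable_ipv6 = 1",
    "net.ipv6.conf.lo.disable_ipv6 = 1",
]


def add_missing_settings(conf_text: str, settings=IPV6_SETTINGS) -> str:
    """Return conf_text with any of `settings` it lacks appended at the end."""
    # sysctl.conf accepts "key = value" and "key=value", so compare without spaces
    present = {line.replace(" ", "") for line in conf_text.splitlines()}
    missing = [s for s in settings if s.replace(" ", "") not in present]
    if not missing:
        return conf_text
    if conf_text and not conf_text.endswith("\n"):
        conf_text += "\n"
    return conf_text + "\n".join(missing) + "\n"


def parse_disable_ipv6(value_text: str) -> bool:
    """Interpret the contents of a disable_ipv6 /proc entry (1 means disabled)."""
    return value_text.strip() == "1"


def ipv6_disabled(proc_path: str = "/proc/sys/net/ipv6/conf/all/disable_ipv6") -> bool:
    """Read the kernel's live setting; meaningful after the restart in step 3."""
    return parse_disable_ipv6(Path(proc_path).read_text())
```

For example, as root: `p = Path("/etc/sysctl.conf"); p.write_text(add_missing_settings(p.read_text()))`. Then restart and call `ipv6_disabled()`.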

HADOOP INSTALLATION

1. Download Hadoop from the link given above.

2. Copy the Hadoop 1.0.3 tar file to a pen drive.

3. Now go to the VM, open the DEVICES menu, and click the pen drive. Ubuntu will detect it.

4. Open the pen drive, copy the Hadoop tar file, and paste it into the Ubuntu HOME directory.

5. Now switch to the terminal and create a directory for Hadoop in a location of your choice with the following command:

6. Move the Hadoop tar file from the HOME directory to the hadoop directory you created in the previous step.

7. Change to the hadoop folder and extract the contents of the Hadoop 1.0.3 tar file using the following command.

8. Now go to "/usr/local" and give ownership of the hadoop folder to the hadoop user (vijay) by executing the following command.

UPDATING $HOME/.bashrc

1. Open the $HOME/.bashrc file of the hadoop user (vijay).

2. Add the following lines at the end of the $HOME/.bashrc file and save it.

CONFIGURATION

1. First, we have to set JAVA_HOME in the hadoop-env.sh file.

2. Open /usr/local/hadoop/hadoop-1.0.3/conf/hadoop-env.sh file in an editor.

3. Find the comment "The java implementation to use. Required."

Uncomment the JAVA_HOME line below it and change its value to /usr/lib/jvm/java-7-oracle/

4. Save the file and exit.

5. Next, we will configure the directory where Hadoop will store its data files. Our setup will use the Hadoop Distributed File System (HDFS), even though it is a single-node cluster.

6. Now we create the directory and set the required ownership and permissions.

7. Switch to your administrative (sudo) user (here bijoy) using su - bijoy and enter the password.

8. Use the following command to create the directory and set its permissions.

9. Switch back to the hadoop user (vijay) using su - vijay and move to the following path:

/usr/local/hadoop/hadoop-1.0.3/conf

10. Open the core-site.xml file and add the following snippet between the <configuration> </configuration> tags.

<property>
  <name>hadoop.tmp.dir</name>
  <value>/app/hadoop/tmp</value>
  <description>A base for other temporary directories.</description>
</property>
<property>
  <name>fs.default.name</name>
  <value>hdfs://localhost:54310</value>
  <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description>
</property>

11. Open the mapred-site.xml file and add the following snippet between the <configuration> </configuration> tags.

<property>
  <name>mapred.job.tracker</name>
  <value>localhost:54311</value>
  <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task.</description>
</property>

12. Open the hdfs-site.xml file and add the following snippet between the <configuration> </configuration> tags.

<property>
  <name>dfs.replication</name>
  <value>1</value>
  <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time.</description>
</property>
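If you configure more than one machine, the edits in steps 3 and 10 to 12 can be scripted. The following hedged Python sketch uses only the standard library. It assumes hadoop-env.sh contains an `export JAVA_HOME=` line (commented out or not), as the stock file does, and it rewrites each *-site.xml from scratch with only the name/value pairs shown above. The `<description>` elements are omitted because they only document the property. Because the files are overwritten, any other properties you have added to them would be lost. `set_java_home`, `site_xml` and `apply_config` are hypothetical helper names, and `ET.indent` requires Python 3.9 or newer.

```python
import re
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Dict

# Values taken from steps 3 and 9-12 above
JAVA_HOME = "/usr/lib/jvm/java-7-oracle/"
CONF_DIR = Path("/usr/local/hadoop/hadoop-1.0.3/conf")
SITE_FILES: Dict[str, Dict[str, str]] = {
    "core-site.xml": {
        "hadoop.tmp.dir": "/app/hadoop/tmp",
        "fs.default.name": "hdfs://localhost:54310",
    },
    "mapred-site.xml": {"mapred.job.tracker": "localhost:54311"},
    "hdfs-site.xml": {"dfs.replication": "1"},
}


def set_java_home(env_text: str, java_home: str) -> str:
    """Uncomment/replace the first `export JAVA_HOME=` line (append one if absent)."""
    pattern = re.compile(r"^#?\s*export\s+JAVA_HOME=.*$", re.MULTILINE)
    new_line = f"export JAVA_HOME={java_home}"
    if pattern.search(env_text):
        # A function replacement avoids backslash escapes being interpreted
        return pattern.sub(lambda _m: new_line, env_text, count=1)
    return env_text.rstrip("\n") + "\n" + new_line + "\n"


def site_xml(properties: Dict[str, str]) -> str:
    """Render a Hadoop *-site.xml document holding the given name/value pairs."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; needs Python 3.9+
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


def apply_config(conf_dir: Path = CONF_DIR) -> None:
    """Patch hadoop-env.sh and overwrite the three *-site.xml files in conf_dir."""
    env_file = conf_dir / "hadoop-env.sh"
    env_file.write_text(set_java_home(env_file.read_text(), JAVA_HOME))
    for filename, properties in SITE_FILES.items():
        (conf_dir / filename).write_text(site_xml(properties))
```

Run `apply_config()` as the hadoop user (vijay), who owns the hadoop folder after the ownership change in the installation steps.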

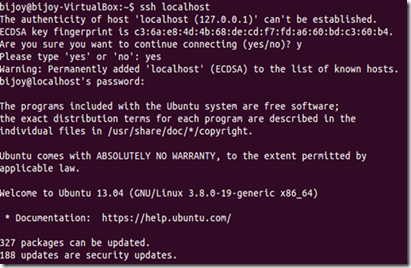

FORMATTING THE FILESYSTEM VIA NAMENODE

The first step in starting your Hadoop installation is to format the Hadoop filesystem, which is implemented on top of the local filesystem of your cluster. This is required only the first time you set up your Hadoop cluster.

1. To format the filesystem, run the following command:

The output will look like this:

GIVE A KICK START TO YOUR HADOOP SINGLE NODE CLUSTER

1. To start your Hadoop cluster, execute the following command:

2. The output will look like this:

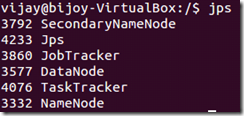

3. We can check whether the expected processes are running using jps.

4. We can also check whether Hadoop is listening to the configured ports using netstat.

5. To stop all daemons running on your machine, execute the following command:

6. Create two folders in HDFS: one for input files and one for output files.

7. Check that the HDFS folders were created.

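8. Now, whenever you run a job on your Hadoop cluster, put the input files in the input-data directory and use the output-data directory for the results. A MapReduce job fails if its output path already exists, so give each job a new output path, for example a subdirectory of output-data.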
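Steps 3 and 4 can also be checked from a script. The following Python sketch shows one possible approach; it is not part of Hadoop. It parses `jps` output (one `<pid> <MainClass>` line per JVM) and compares it with the five daemons that a Hadoop 1.x single-node cluster runs once fully started: NameNode, SecondaryNameNode, DataNode, JobTracker and TaskTracker. Instead of reading netstat, it then tries a TCP connection to the two ports configured in core-site.xml and mapred-site.xml. It assumes the JDK's `jps` is on the PATH and uses IPv4 only, since IPv6 was disabled earlier. The helper names are hypothetical.

```python
import socket
import subprocess
from typing import Iterable, Set

# JVM daemons a Hadoop 1.x single-node cluster runs once fully started
EXPECTED_DAEMONS = {"NameNode", "SecondaryNameNode", "DataNode", "JobTracker", "TaskTracker"}

# IPC ports configured in core-site.xml and mapred-site.xml above
CONFIGURED_PORTS = {"fs.default.name": 54310, "mapred.job.tracker": 54311}


def running_daemons(jps_output: str) -> Set[str]:
    """Collect main-class names from `jps` lines of the form '<pid> <MainClass>'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected: Iterable[str] = EXPECTED_DAEMONS) -> Set[str]:
    """Return the expected daemons that do not appear in the jps output."""
    return set(expected) - running_daemons(jps_output)


def port_open(port: int, host: str = "localhost", timeout: float = 1.0) -> bool:
    """True if something accepts TCP connections on host:port (IPv4 only)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    try:
        jps_out = subprocess.run(["jps"], capture_output=True, text=True).stdout
    except FileNotFoundError:
        jps_out = ""  # jps ships with the JDK; without it nothing is detected
    print("missing daemons:", sorted(missing_daemons(jps_out)) or "none")
    for prop, port in CONFIGURED_PORTS.items():
        print(f"{prop} ({port}):", "open" if port_open(port) else "closed")
```

An empty "missing daemons" result with both ports open corresponds to a healthy single-node cluster. If any daemon is missing, its log file under the Hadoop logs directory is the place to look.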

ENJOY THE HADOOP WEB INTERFACE

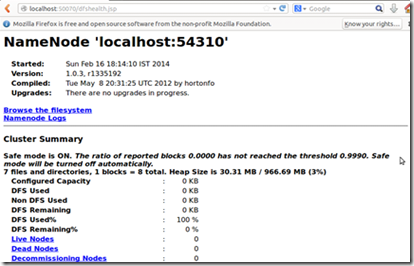

1. The NameNode web UI shows a cluster summary, including total and remaining capacity and live and dead nodes. It also lets you browse the HDFS namespace, view the contents of its files in the web browser, and access the local machine's Hadoop log files.

By default it is available at http://localhost:50070/

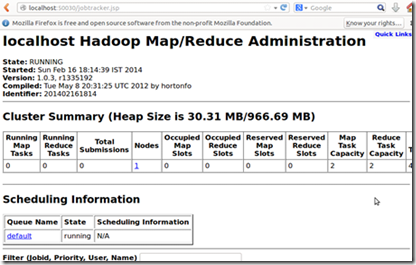

2. The JobTracker web UI provides general job statistics for the Hadoop cluster, running, completed and failed jobs, and a job history log file. It also gives access to the "local machine's" Hadoop log files (the machine the web UI is running on).

By default it is available at http://localhost:50030/

3. The TaskTracker web UI shows running and non-running tasks. It also gives access to the "local machine's" Hadoop log files.

By default it is available at http://localhost:50060/
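To check the three web interfaces without a browser, the following small Python sketch uses only `urllib` from the standard library. The URLs are the defaults listed above, and `check_ui` is a hypothetical helper. An HTTP error status still counts as "up", because it means a server answered on that port.

```python
import urllib.error
import urllib.request

# Default Hadoop 1.x web UI addresses listed above
WEB_UIS = {
    "NameNode": "http://localhost:50070/",
    "JobTracker": "http://localhost:50030/",
    "TaskTracker": "http://localhost:50060/",
}


def check_ui(url: str, timeout: float = 3.0) -> str:
    """Describe whether a web UI answers: 'up (HTTP <code>)' or 'down (<reason>)'."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return f"up (HTTP {resp.status})"
    except urllib.error.HTTPError as exc:
        # An HTTP error status still means a server answered on that port
        return f"up (HTTP {exc.code})"
    except OSError as exc:
        # URLError (e.g. connection refused) and timeouts are both OSErrors
        return f"down ({exc})"


if __name__ == "__main__":
    for name, url in WEB_UIS.items():
        print(f"{name:<12} {url:<28} {check_ui(url)}")
```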

So, your single-node Hadoop cluster is ready for use.

I will soon be back with a tutorial on converting this single-node cluster into a multi-node Hadoop cluster.

Please provide your valuable feedback if you find this tutorial useful.

Thanks & Regards

Bijoy Kumar Khandelwal

System Engineer, Big Data, Infosys Limited
